Machine learning is used to enhance many industrial and professional processes. Such applications rely on classification or regression models deployed in real-world scenarios, where their predictions have real and immediate impact. However, these models, and especially deep learning models, require vast amounts of data to train properly. In most cases, the required data are collected at different locations and are thus independent of each other. An indicative example is electronic health record (EHR) patient-level data gathered from different hospitals, covering patients of different nationalities, socioeconomic status, etc. A common approach is to aggregate the data and process them centrally on a single server or device. However, this approach raises privacy concerns and may not comply with data protection laws such as the General Data Protection Regulation (GDPR), which increases the demand for decentralized alternatives. Federated Learning (FL) is a prominent solution to such concerns and has been adopted in a number of sectors, such as defense, IoT, and pharmaceutics. Client data are kept confidential, since local models are trained individually on each client's premises and only the model parameters are sent to a central server. The central server aggregates these parameters to derive the global model, which, in contrast to the centralized training case, is then shared among the clients. In this way, the global model encapsulates the knowledge gained from each client without disclosing private data.
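To make the aggregation step concrete, here is a minimal sketch of one common choice, a sample-size-weighted average of client parameters in the style of FedAvg. The text above does not say which aggregation rule is used, so the rule itself, the function name `federated_average`, and the flat-list representation of model parameters are all assumptions made for illustration.

```python
from typing import List, Sequence


def federated_average(client_weights: Sequence[Sequence[float]],
                      client_sizes: Sequence[int]) -> List[float]:
    """Weighted average of flat client parameter vectors (FedAvg-style).

    Hypothetical helper: each client sends only its parameters and the
    number of local training samples, never the samples themselves.
    """
    if not client_weights or len(client_weights) != len(client_sizes):
        raise ValueError("need exactly one sample count per client update")
    total = sum(client_sizes)
    if total <= 0:
        raise ValueError("total sample count must be positive")
    dim = len(client_weights[0])
    if any(len(w) != dim for w in client_weights):
        raise ValueError("all client updates must have the same length")

    global_weights = [0.0] * dim
    for weights, size in zip(client_weights, client_sizes):
        share = size / total  # clients with more data contribute more
        for i, value in enumerate(weights):
            global_weights[i] += share * value
    return global_weights


# Three hypothetical clients with two parameters each.
updates = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
sizes = [10, 10, 20]
global_model = federated_average(updates, sizes)
print(global_model)
```

In a real system the parameters would be tensors per layer rather than a flat list, but the server-side logic has the same shape: only parameters and sample counts cross the network.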

Even though this approach is appealing and addresses data privacy in the general case, limitations remain concerning the system’s security. Specifically, since clients fully control their data and the model they receive, they can alter either component: they can change the model parameters (weights, biases) or tamper with the training data, for instance by altering labels or injecting fake samples. Consequently, a compromised client can interfere with the learning process of the federated system and perform malicious actions such as pattern injection and model degradation. These attacks are called data poisoning when the private data are altered and model poisoning when the model parameters are altered. Moreover, since the local and global models are exchanged over the network, adversaries could intercept communications and perform inference attacks to obtain sensitive information. Additional security tools and mechanisms are thus needed to protect federated systems from data and model poisoning attacks. Recent research has focused on developing variations of the aggregation algorithm that tolerate malicious participants, such as median aggregation and trimmed mean aggregation. These techniques rely on the assumption that model updates originating from malicious participants differ significantly from those of benign clients. Thus, by using distance-based algorithms, the poisoned parameters can be detected and excluded from the aggregation process.
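The idea behind such robust aggregation rules can be sketched in a few lines. The example below implements a coordinate-wise trimmed mean and, as a closely related robust rule, a coordinate-wise median. The function names, the flat-list parameter format, and the toy numbers are illustrative assumptions, not taken from any specific system.

```python
from statistics import median
from typing import List, Sequence


def coordinate_median(updates: Sequence[Sequence[float]]) -> List[float]:
    """Take the median of each parameter across all client updates."""
    return [median(column) for column in zip(*updates)]


def trimmed_mean(updates: Sequence[Sequence[float]], trim: int) -> List[float]:
    """Drop the `trim` smallest and `trim` largest values per coordinate,
    then average what remains."""
    n = len(updates)
    if trim < 0 or 2 * trim >= n:
        raise ValueError("trim must satisfy 0 <= 2 * trim < number of clients")
    result = []
    for column in zip(*updates):
        kept = sorted(column)[trim:n - trim]
        result.append(sum(kept) / len(kept))
    return result


# Four hypothetical benign clients and one client sending an extreme update.
benign = [[0.9, 1.1], [1.0, 1.0], [1.1, 0.9], [1.0, 1.2]]
poisoned = [[50.0, -40.0]]
updates = benign + poisoned

plain_mean = [sum(column) / len(column) for column in zip(*updates)]
robust = trimmed_mean(updates, trim=1)
med = coordinate_median(updates)

print("plain mean:  ", plain_mean)  # dragged far away by the outlier
print("trimmed mean:", robust)      # stays close to the benign updates
print("median:      ", med)
```

Both rules depend on the assumption stated above: they help only when the poisoned update is an outlier. An update crafted to stay close to the benign ones would pass through either rule largely unchanged.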

Generative Adversarial Networks (GANs) are neural networks trained in an adversarial way: a generator learns to produce synthetic samples while a discriminator learns to distinguish them from real data. They were introduced primarily for the task of image generation. Although they have since been tailored to many different scenarios, image generation is still an area of active research, where new architectures and techniques are employed for tasks such as person generation and high-resolution image generation. Within federated systems, GANs have been used for both benign and malicious purposes. In the benign case, the global model can be a GAN trained for image generation in a federated manner. In the malicious case, a malicious user could use the global model as the discriminator of a GAN. This GAN could then be used to generate samples that resemble the data of benign clients, which constitutes an inference attack.

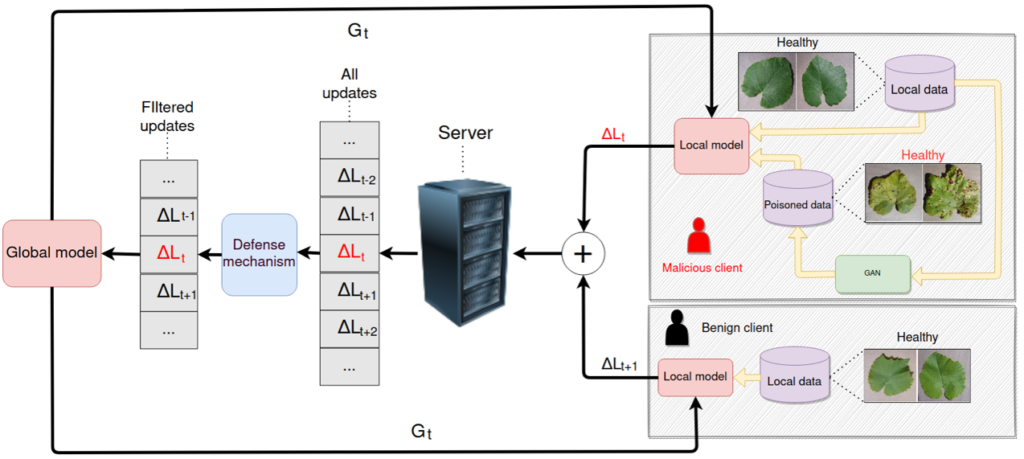

In the context of IoT-NGIN activities, a journal article entitled “GAN-Driven Data Poisoning Attacks and their Mitigation in Federated Learning Systems” has been published. In this paper, we introduce two GAN-based data poisoning attacks aimed at an image classification task in a federated learning system. We experimented with diverse GAN architectures so that they could be trained on very limited data and used to mount two label-flipping attacks: (a) one that aims to degrade the accuracy of the global model and (b) one that aims to cause misclassification of a target label. Our experiments show that these attacks could not be mitigated by common defenses. We therefore propose a technique to mitigate these types of backdoor attacks in the FL system shown in the figure above. We demonstrate the effectiveness of our approach in the Smart Agriculture use case, where images of vineyards are used for crop disease classification. In this use case, our method successfully reverts the targeted label attack and increases accuracy by 12% in the model degradation attack case.
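For readers unfamiliar with label flipping, the following sketch illustrates the difference between the two attack goals on a plain list of integer labels. It is not the GAN-based pipeline from the paper; it only shows what flipping labels means in the untargeted (accuracy degradation) and targeted (specific label misclassification) cases. All function names, parameters, and class indices are hypothetical.

```python
import random
from typing import List, Sequence


def flip_targeted(labels: Sequence[int], source: int, target: int) -> List[int]:
    """Relabel every sample of class `source` as class `target`."""
    return [target if y == source else y for y in labels]


def flip_untargeted(labels: Sequence[int], num_classes: int,
                    fraction: float, seed: int = 0) -> List[int]:
    """Give a random fraction of the samples a different, random label."""
    if num_classes < 2:
        raise ValueError("need at least two classes to flip a label")
    rng = random.Random(seed)
    flipped = list(labels)
    count = int(len(flipped) * fraction)
    for i in rng.sample(range(len(flipped)), count):
        other_classes = [c for c in range(num_classes) if c != flipped[i]]
        flipped[i] = rng.choice(other_classes)
    return flipped


# Hypothetical labels for a three-class image classification task.
labels = [0, 1, 2, 0, 1, 2, 0, 1, 2, 0]

targeted = flip_targeted(labels, source=1, target=2)
untargeted = flip_untargeted(labels, num_classes=3, fraction=0.5)

print("targeted:  ", targeted)
print("untargeted:", untargeted)
```

A client that trains on such relabeled data sends a poisoned update to the server without modifying the model parameters directly, which is why this class of attack counts as data poisoning.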

A detailed analysis of the approach and the results can be found in the full-text paper, available as open access.