Crop diseases can in many cases be predicted or detected early using microclimate measurements (mainly the temperature and humidity of the air, the leaves, and the soil), crop image processing, and visual analytics. Within the IoT-NGIN “Smart irrigation and precision aerial spraying” use case, crop disease prediction will be trialled using:

- microclimate measurements acquired via Synelixis SynField® precision agriculture IoT nodes,

- images and real-time video analysis of the crop and the leaves, captured by visual and multi-spectral cameras mounted on semi-autonomous drones flying over the orchard.

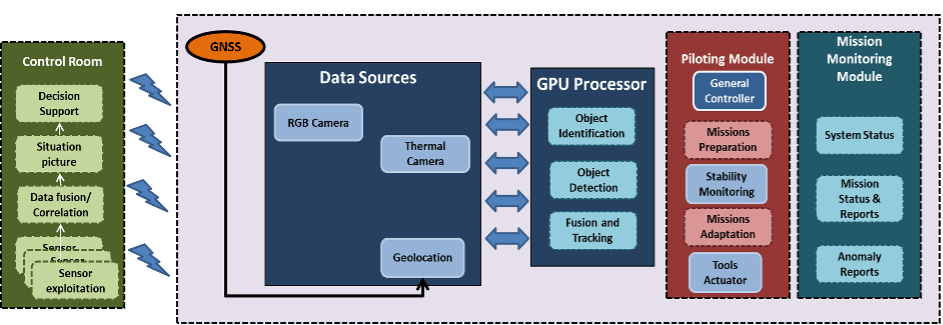

The ML-DRONE sub-project was accepted via the IoT-NGIN Project Open Call #1 in April 2022 and participates in the IoT-NGIN Smart Agriculture Living Lab. This use case aims to optimize precision aerial spraying based on real-time video analysis conducted at one of two levels: locally (on the drone), using already trained ML models, or remotely (at the edge), using federated Machine Learning (ML). Based on the results of this ML processing, the drones can dynamically modify their trajectory and spray only the areas of predicted or detected disease rather than the whole orchard.
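As a rough illustration of spraying only where disease is predicted, the sketch below assumes the orchard is divided into grid cells and that the ML model yields one disease probability per cell. The grid layout, the function name `select_spray_cells`, and the threshold values are hypothetical and not part of the ML-DRONE implementation.

```python
from typing import Dict, List, Tuple

# Hypothetical representation: (row, column) index of a grid cell over the orchard.
Cell = Tuple[int, int]


def select_spray_cells(disease_prob: Dict[Cell, float],
                       threshold: float = 0.5) -> List[Cell]:
    """Return the cells whose predicted disease probability reaches the
    threshold, sorted in row-major order so they can be visited in sequence."""
    return sorted(cell for cell, p in disease_prob.items() if p >= threshold)


# Made-up per-cell predictions, for illustration only.
predictions = {(0, 0): 0.05, (0, 1): 0.72, (1, 0): 0.10, (1, 1): 0.91}
targets = select_spray_cells(predictions, threshold=0.6)
print(targets)
```

Only the cells returned by the function would be added to the spraying trajectory; the rest of the orchard is skipped.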

More specifically, ML-DRONE is a solution for porting ML models as open-source embedded software to the Acceligence CERBERUS drone system, which can then be used either as an IoT node with AI features or as an instantly deployable edge node. ML-DRONE evaluates the TensorFlow Lite and TensorFlow Lite Micro systems, leveraging and expanding the drone's AI capabilities. The tool builds upon, combines, and expands existing embedded open-source implementations on the drone's microprocessor system according to the needs and the available resources. The final drone system, equipped with the AI module, can be deployed on request as an edge cloud resource in seconds and, via open APIs, can support additional IoT devices.

Acceligence’s ML-DRONE implementation is based on CERBERUS, a fully customized Unmanned Aerial Vehicle (UAV) which is the company’s best seller. CERBERUS is an octa-copter with the following characteristics:

- max thrust (nominal) 22.8 kg

- vehicle mass approx. 7.5 kg

- vehicle mass (batteries, camera & companion computer included) approx. 10 kg

- max take off weight (50% of max thrust) 11.4 kg

- dimensions (between opposite rotor shafts) 1.26 m

- flight time up to 40 min (depends on payload and wind)

- flight radius with radio control: max 1500 m; with waypoints: depends on power consumption, payload, and weather conditions

- operating temperatures -10 to 45 °C.
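The thrust and mass figures in the list above can be cross-checked with a few lines of arithmetic. The sketch below recomputes the max take-off weight as 50% of the nominal max thrust and derives the remaining payload margin. The margin is not stated in the original list; it is an illustrative quantity that assumes the approximate 10 kg loaded mass is the baseline.

```python
MAX_THRUST_KG = 22.8    # nominal max thrust, from the list above
LOADED_MASS_KG = 10.0   # approx. mass incl. batteries, camera, companion computer
MTOW_FRACTION = 0.5     # max take-off weight is 50% of max thrust


def max_takeoff_weight(max_thrust_kg: float,
                       fraction: float = MTOW_FRACTION) -> float:
    """Max take-off weight as a fixed fraction of the max thrust."""
    return max_thrust_kg * fraction


def payload_margin(max_thrust_kg: float, loaded_mass_kg: float,
                   fraction: float = MTOW_FRACTION) -> float:
    """Mass that can still be added before reaching the max take-off weight
    (illustrative quantity, assuming the loaded mass is the baseline)."""
    return max_takeoff_weight(max_thrust_kg, fraction) - loaded_mass_kg


mtow = max_takeoff_weight(MAX_THRUST_KG)
margin = payload_margin(MAX_THRUST_KG, LOADED_MASS_KG)
print(f"MTOW: {mtow:.1f} kg, payload margin: {margin:.1f} kg")
```

With the listed values this gives a max take-off weight of 11.4 kg, matching the list, and leaves roughly 1.4 kg for additional payload under the stated assumption.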

Due to its optimized design, it has an extended flight time of up to 30 minutes, which is a substantial advantage over conventional models currently available on the market, and offers high-precision localization of 1 cm using GPS-RTK2. The drone can accommodate various hardware components (e.g., height/distance sensors, cameras) in order to execute smart algorithms (e.g., visual object detection and collision avoidance services, algorithms for swarming), and can be easily adapted by the user to the operation at hand. In addition, the CERBERUS UAV is one of the first UAVs with a separate onboard computer with an embedded Jetson Xavier processor, running the latest version of the Robot Operating System (ROS) in order to host Artificial Intelligence (AI) algorithms for object detection and identification for edge processing. The CERBERUS Mission UAV is capable of performing on-board image processing using machine learning-based techniques, more precisely Deep Neural Networks (DeepNN) and Convolutional Neural Networks (CNN), which exploit the data (e.g., 2D/3D images and point clouds) obtained from the aforementioned sensors.

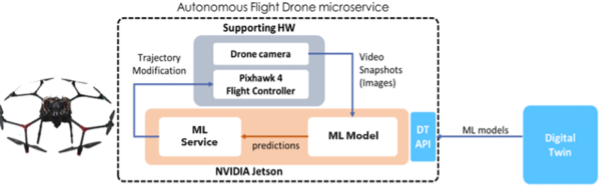

Figure 1: CERBERUS Mission UAV high-level architecture, © Acceligence Ltd.

The goal is to have an Autonomous Flying Drone (AFD) that is able to move autonomously within a field, inspecting various crops. The service is based on an ML model for object detection, which has been trained and stored in the MLaaS model storage. The model is still in early development and will be further enhanced as datasets from the pilot site become available. The AFD service is intended to run on the drone, together with the trained model. Based on the ML predictions regarding the detection of trees, the AFD service will trigger control actions on the drone that change its flight trajectory, so that it avoids a potential collision while still reaching the set destination.
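The detection-to-control step described above can be sketched as a simple decision rule. This is a minimal illustration under stated assumptions, not the AFD service itself: the `Detection` type, the normalized image coordinates, the central "corridor" and the action names are all hypothetical.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Detection:
    """Hypothetical tree detection: horizontal extent of the bounding box in
    normalized image coordinates (0 = left edge, 1 = right edge)."""
    x_min: float
    x_max: float
    confidence: float


def choose_action(detections: List[Detection],
                  corridor: Tuple[float, float] = (0.4, 0.6),
                  min_confidence: float = 0.5) -> str:
    """Return 'continue' if no confident detection overlaps the central
    corridor; otherwise steer toward the side with more free image width."""
    lo, hi = corridor
    blocking = [d for d in detections
                if d.confidence >= min_confidence
                and d.x_max > lo and d.x_min < hi]
    if not blocking:
        return "continue"
    # Free width left of the leftmost and right of the rightmost blocking box.
    free_left = min(d.x_min for d in blocking)
    free_right = 1.0 - max(d.x_max for d in blocking)
    return "steer_left" if free_left >= free_right else "steer_right"


# A tree slightly right of centre: more free space on the left.
print(choose_action([Detection(0.45, 0.70, 0.9)]))
```

In a real system the returned action would be translated into flight commands, and the drone would resume its course toward the set destination once the corridor is clear.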