When it comes to heterogeneous computing, the pairing of a CPU and a GPU is probably the most widely used combination of very different architectures. Originating in games and media applications, GPUs are nowadays used for all kinds of computations because they allow massive parallelism. Close to 30% of the systems on the TOP500 list [1] employ GPU accelerators, and 7 of the 10 largest clusters use them.

The advantages of GPUs are clearly desirable in cloud and edge-cloud scenarios as well. However, a physical GPU cannot always be added to an installation, and complex virtualization setups can be a challenge.

This is where a technology advanced in the IoT-NGIN project could come into play: Cricket is software that allows the transparent execution of CUDA code on remote systems. This makes it possible to separate CPU-intensive work from GPU-intensive work, which promises greater efficiency through higher GPU utilization enabled by more flexible GPU allocation.

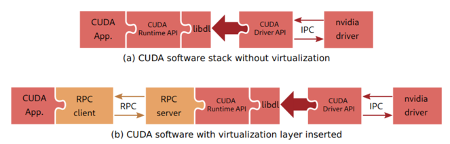

Cricket emulates a CUDA device and relays GPU-related functionality to a different system that contains a GPU. No recompilation is required; preloading the Cricket library is sufficient.
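To illustrate the general idea of relaying API calls to another machine, here is a minimal, hypothetical Python sketch. It is not Cricket's implementation or protocol: the class and operation names (`RemoteGpuServer`, `ClientStub`, `malloc`, `memcpy_h2d`, `memcpy_d2h`, `free`) are illustrative assumptions, the "device memory" is just a dictionary of byte buffers, and a direct function call carrying a JSON string stands in for the network. The client stub offers the same calls an application would make, serializes each one, and lets the server side execute it.

```python
import json


class RemoteGpuServer:
    """Hypothetical stand-in for the machine that owns the GPU (memory is simulated)."""

    def __init__(self):
        self.memory = {}       # simulated device memory: handle -> bytearray
        self.next_handle = 1   # handles play the role of device pointers

    def handle_request(self, message: str) -> str:
        # Decode the serialized call, execute it locally, serialize the result.
        request = json.loads(message)
        op, args = request["op"], request["args"]
        if op == "malloc":
            handle = self.next_handle
            self.next_handle += 1
            self.memory[handle] = bytearray(args["size"])
            result = handle
        elif op == "memcpy_h2d":
            buffer = self.memory[args["ptr"]]
            data = bytes(args["data"])
            if len(data) > len(buffer):
                raise ValueError("copy exceeds allocation")
            buffer[: len(data)] = data
            result = None
        elif op == "memcpy_d2h":
            # Bytes are sent back as a list of ints so they survive JSON encoding.
            result = list(self.memory[args["ptr"]][: args["size"]])
        elif op == "free":
            del self.memory[args["ptr"]]
            result = None
        else:
            raise ValueError(f"unsupported op: {op}")
        return json.dumps({"result": result})


class ClientStub:
    """Hypothetical client side: same calls as a local API, but forwarded."""

    def __init__(self, transport):
        self.transport = transport  # any callable that takes and returns a str

    def _call(self, op, **args):
        reply = self.transport(json.dumps({"op": op, "args": args}))
        return json.loads(reply)["result"]

    def malloc(self, size):
        return self._call("malloc", size=size)

    def memcpy_h2d(self, ptr, data: bytes):
        self._call("memcpy_h2d", ptr=ptr, data=list(data))

    def memcpy_d2h(self, ptr, size) -> bytes:
        return bytes(self._call("memcpy_d2h", ptr=ptr, size=size))

    def free(self, ptr):
        self._call("free", ptr=ptr)


if __name__ == "__main__":
    server = RemoteGpuServer()
    gpu = ClientStub(server.handle_request)  # in-process call instead of a socket
    ptr = gpu.malloc(4)
    gpu.memcpy_h2d(ptr, b"\x01\x02\x03\x04")
    print(gpu.memcpy_d2h(ptr, 4))
    gpu.free(ptr)
```

Replacing the in-process transport with a socket connection would make the setup genuinely remote while the application-facing calls stay the same. That unchanged interface is the property that, in Cricket's case, lets a preloaded library stand in for the local CUDA device without recompiling the application.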

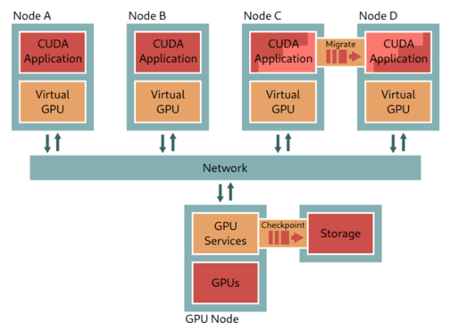

With this approach, a single GPU can be shared across multiple systems, as depicted in the following picture:

This scenario is especially relevant in cloud computing, where dozens of lightweight virtual machines are often used, GPU access is difficult, and CUDA drivers increase the footprint of each VM.
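The sharing scenario can be sketched in a few lines of Python. This is a hypothetical simulation, not Cricket's scheduler: `SharedGpuServer`, `vector_add`, and `run_vm` are illustrative names, each thread plays one lightweight VM without a local GPU or driver, and a plain list addition stands in for a GPU kernel. Funnelling every request through a single queue and worker is just one simple scheduling choice assumed here.

```python
import queue
import threading


class SharedGpuServer:
    """Hypothetical sketch: one simulated GPU serving requests from many clients."""

    def __init__(self):
        self.requests = queue.Queue()
        self.calls_per_client = {}
        self._worker = threading.Thread(target=self._serve, daemon=True)
        self._worker.start()

    def _serve(self):
        # A single worker drains the queue, so the "GPU" runs one request at a time.
        while True:
            client_id, a, b, reply = self.requests.get()
            self.calls_per_client[client_id] = self.calls_per_client.get(client_id, 0) + 1
            reply.put([x + y for x, y in zip(a, b)])  # stand-in for a vector-add kernel

    def vector_add(self, client_id, a, b):
        # Called by a client; blocks until the shared server has produced the result.
        reply = queue.Queue(maxsize=1)
        self.requests.put((client_id, a, b, reply))
        return reply.get()


def run_vm(server, client_id, results):
    # Each thread represents one VM that offloads its GPU work to the shared server.
    results[client_id] = server.vector_add(client_id, [client_id] * 3, [1, 2, 3])


if __name__ == "__main__":
    server = SharedGpuServer()
    results = {}
    vms = [threading.Thread(target=run_vm, args=(server, i, results)) for i in range(4)]
    for vm in vms:
        vm.start()
    for vm in vms:
        vm.join()
    print(results)
    print(server.calls_per_client)
```

All four "VMs" obtain results from the same simulated device, and the per-client counters show that every one of them was served. A real deployment would additionally have to isolate the clients' device memory and decide how to interleave their work on the GPU.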

If you are interested in this technology, the following publications describe it in more detail:

- https://onlinelibrary.wiley.com/doi/10.1002/cpe.6474

- https://link.springer.com/chapter/10.1007/978-3-030-71593-9_13

The source code of Cricket can be found at:

References

[1] https://www.top500.org/